Define and differentiate features and technologies present in Acropolis, Prism and Calm

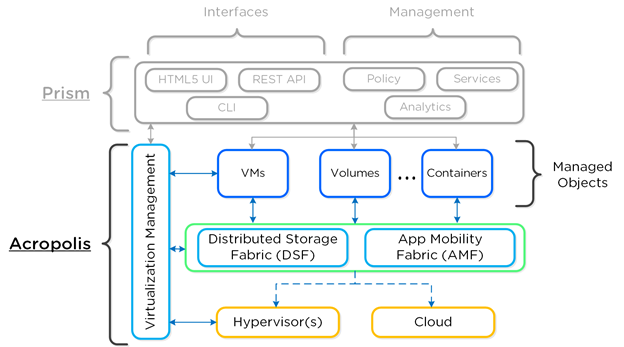

Acropolis

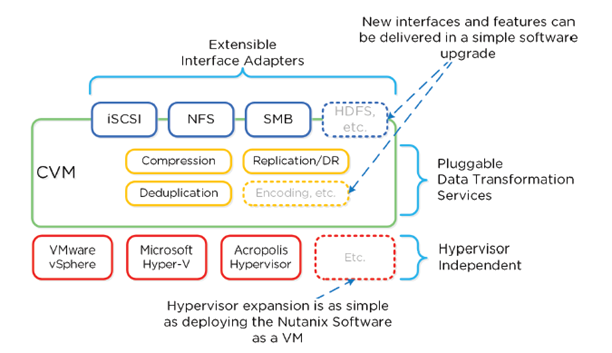

Acropolis is a distributed multi-resource manager, orchestration platform and data plane. It is broken down into the following three main components:

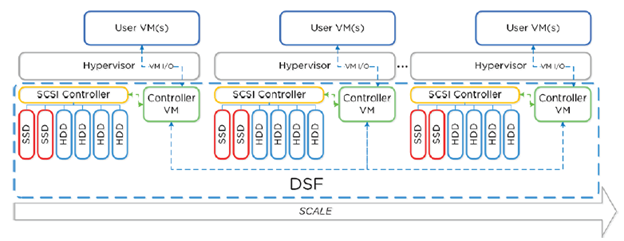

Distributed Storage Fabric (DSF)

- This is the core and origin of the Nutanix platform and expands upon the Nutanix Distributed Filesystem (NDFS). NDFS has evolved from a distributed system that pools storage resources into a much larger and more capable storage platform.

- Distributed multi-resource manager, orchestration platform, data plane

- All nodes form DSF

- Appears to the hypervisor as a storage array

- All I/O is handled locally for the highest performance

App Mobility Fabric (AMF)

- Hypervisors abstracted the OS from hardware, and the AMF abstracts workloads (VMs, storage, containers, etc.) from the hypervisor. This makes it possible to move workloads dynamically between hypervisors and clouds, and for Nutanix nodes to change hypervisors.

Hypervisor

- A multi-purpose hypervisor based upon the CentOS KVM hypervisor.

- The Acropolis service handles workload and resource management, provisioning, and operations

- Seamlessly move workloads between hypervisors/clouds

Architecture

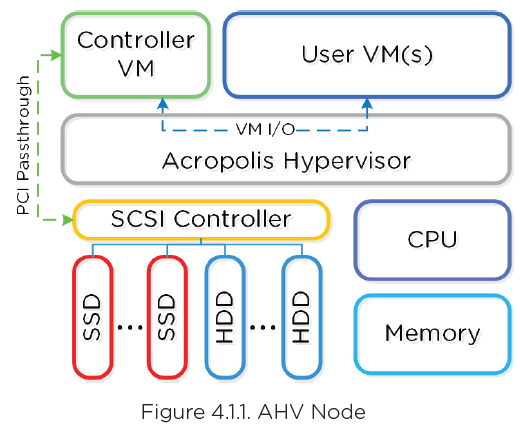

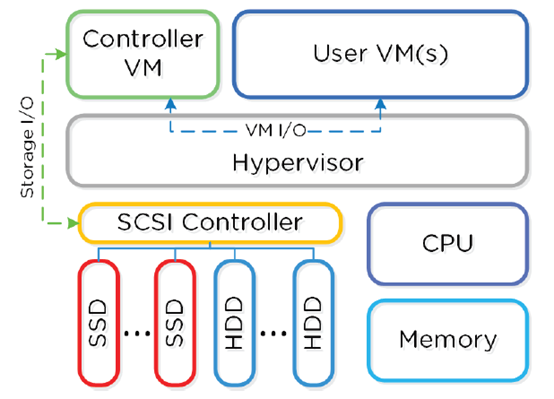

- The CVM runs as a VM, and disks are presented to it using PCI passthrough

- Allows the full PCI controller to be passed to the CVM, bypassing the hypervisor

- Based on CentOS

- Includes HA, live migration, etc.

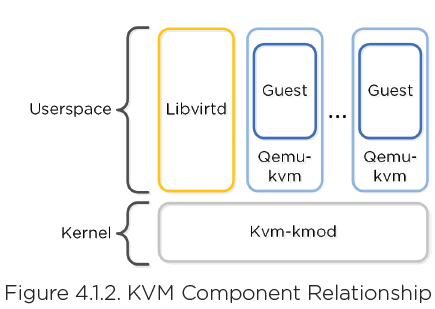

- KVM-kmod – kernel module

- Libvirtd – API, daemon, and management tool for managing KVM/QEMU.

- Communication between Acropolis/KVM/QEMU occurs through libvirtd.

- Qemu-kvm – machine emulator/virtualizer runs in userspace for every VM.

- Used for hardware assisted virtualization

- AHV has an EVC-like feature.

- Determines lowest processor generation in cluster and constrains all QEMU domains to that level
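The baseline selection described in the last two bullets can be sketched as follows. This is only an illustration: the generation names, their ordering, and the `cpu_model` field are assumptions made for the example, not the list or data model AHV actually uses.

```python
# Hypothetical ordering of processor generations, oldest first.
# The names are placeholders for illustration, not AHV's actual list.
GENERATIONS = ["SandyBridge", "IvyBridge", "Haswell", "Broadwell", "Skylake"]


def cluster_baseline(host_generations):
    """Return the oldest processor generation present in the cluster."""
    if not host_generations:
        raise ValueError("cluster has no hosts")
    return min(host_generations, key=GENERATIONS.index)


def constrain_domains(domains, baseline):
    """Return a copy of each VM domain config pinned to the baseline CPU model."""
    return [{**domain, "cpu_model": baseline} for domain in domains]


hosts = ["Broadwell", "Haswell", "Skylake"]
baseline = cluster_baseline(hosts)
vms = constrain_domains([{"name": "vm1"}, {"name": "vm2"}], baseline)
print(baseline, vms)
```

Pinning every domain to the oldest generation is what keeps live migration possible between any two hosts in a mixed cluster.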

Scalability

- Max cluster size: N/A (same as Nutanix cluster)

- Max vCPU/VM: Number of physical cores/host

- Max mem/VM: 2TB

- Max VM/host: N/A (limited by mem)

- Max VM/cluster: N/A (limited by mem)

Networking

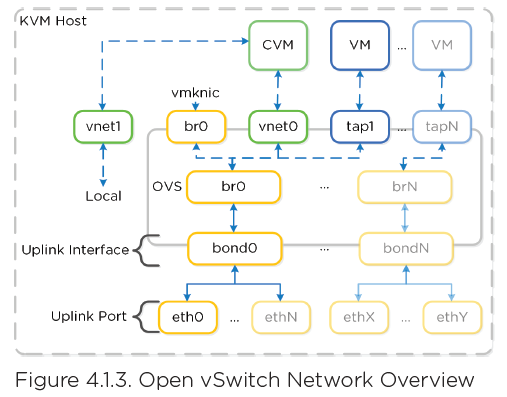

- Leverages Open vSwitch (OVS)

- Each VM NIC connected to TAP interface

- Supports Access and Trunk ports

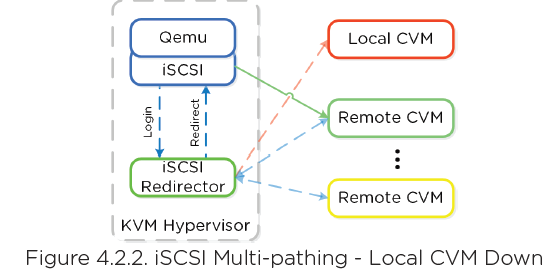

iSCSI Multipathing

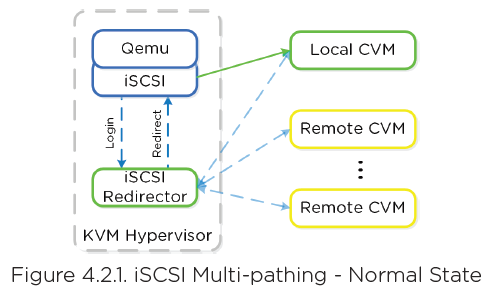

- Each host runs an iSCSI redirector daemon, which checks Stargate health with NOP OUT commands

- QEMU configured with iSCSI redirector as iSCSI target portal

- The redirector redirects to a healthy Stargate

- If Stargate goes down (stops responding to NOP OUT commands), iSCSI redirector marks local Stargate as unhealthy.

- Redirector will redirect to another healthy Stargate

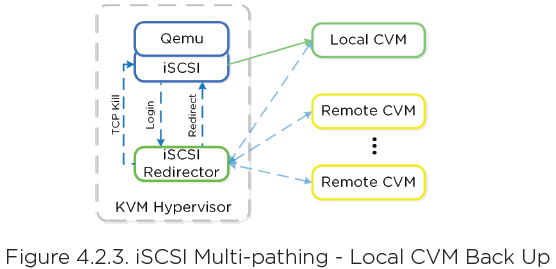

- Once the local Stargate comes back, the redirector performs a TCP kill and redirects back to the local Stargate.
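The failover and failback behavior can be modeled with a small sketch. This is a toy model under stated assumptions, not the redirector's actual code: the `Redirector` class and its method names are hypothetical, and the TCP kill is only represented by switching the recorded active target.

```python
LOCAL_PORTAL = "192.168.5.254:3261"  # local Stargate's internal address


class Redirector:
    """Toy model of the failover/failback decision; not the real daemon."""

    def __init__(self, local, remotes):
        self.local = local
        self.remotes = list(remotes)
        self.healthy = {portal: True for portal in [local, *remotes]}
        self.active = local

    def record_nop_out(self, portal, responded):
        """Store the result of one NOP OUT health probe."""
        self.healthy[portal] = responded

    def select_target(self):
        """Prefer the local Stargate; otherwise pick a healthy remote one."""
        if self.healthy[self.local]:
            target = self.local
        else:
            candidates = [p for p in self.remotes if self.healthy[p]]
            if not candidates:
                raise RuntimeError("no healthy Stargate available")
            target = candidates[0]
        if target != self.active:
            # The real redirector kills the TCP session so the initiator
            # reconnects; this sketch only records the new target.
            self.active = target
        return self.active


r = Redirector(LOCAL_PORTAL, ["10.3.140.158:3261", "10.3.140.153:3261"])
first = r.select_target()
r.record_nop_out(LOCAL_PORTAL, False)   # local Stargate stops answering
failover = r.select_target()
r.record_nop_out(LOCAL_PORTAL, True)    # local Stargate recovers
failback = r.select_target()
print(first, failover, failback)
```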

Troubleshooting iSCSI

- Check iSCSI Redirector logs: cat /var/log/iscsi_redirector

- In the iscsi_redirector log (located in /var/log/ on the AHV host), you can see each Stargate’s health:

2017-08-18 19:25:21,733 - INFO - Portal 192.168.5.254:3261 is up

2017-08-18 19:25:25,735 - INFO - Portal 10.3.140.158:3261 is up

2017-08-18 19:25:26,737 - INFO - Portal 10.3.140.153:3261 is up

- NOTE: The local Stargate is shown via its 192.168.5.254 internal address

- In the following you can see the iscsi_redirector is listening on 127.0.0.1:3261:

[root@NTNX-BEAST-1 ~]# netstat -tnlp | egrep tcp.*3261

Proto … Local Address Foreign Address State PID/Program name

tcp … 127.0.0.1:3261 0.0.0.0:* LISTEN 8044/python
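When the log is long, a short script can summarize the latest reported state of each portal. The sketch below assumes the line format shown in the log excerpt; only the `is up` wording appears in these notes, so any other state word is assumed to follow the same pattern. The function name `portal_states` is made up for this example.

```python
import re

# Matches lines such as:
#   "2017-08-18 19:25:21,733 - INFO - Portal 192.168.5.254:3261 is up"
# Accepts either a hyphen or an en dash as the field separator.
PORTAL_LINE = re.compile(
    r"^(?P<ts>\S+ \S+) [-–] (?P<level>\w+) [-–] "
    r"Portal (?P<portal>\S+) is (?P<state>\w+)$"
)


def portal_states(lines):
    """Return the most recent state reported for each portal."""
    states = {}
    for line in lines:
        match = PORTAL_LINE.match(line.strip())
        if match:
            states[match["portal"]] = match["state"]
    return states


sample = [
    "2017-08-18 19:25:21,733 - INFO - Portal 192.168.5.254:3261 is up",
    "2017-08-18 19:25:25,735 - INFO - Portal 10.3.140.158:3261 is up",
    "2017-08-18 19:25:26,737 - INFO - Portal 10.3.140.153:3261 is up",
]
states = portal_states(sample)
print(states)
```

On a host, the same function could be fed the lines of the redirector log file instead of the `sample` list.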

Frodo I/O Path (aka AHV Turbo Mode)

As storage technologies continue to evolve and become more efficient, so must we. Given that we fully control AHV and the Nutanix stack, this was an area of opportunity.

In short, Frodo is a heavily optimized I/O path for AHV that allows for higher throughput, lower latency, and less CPU overhead.

Controller VM (CVM)

- Handles all I/O for the host

- Doesn’t rely on hardware offloads or constructs for extensibility

- Deploy custom features via software

- Allows newer generation features for older hardware

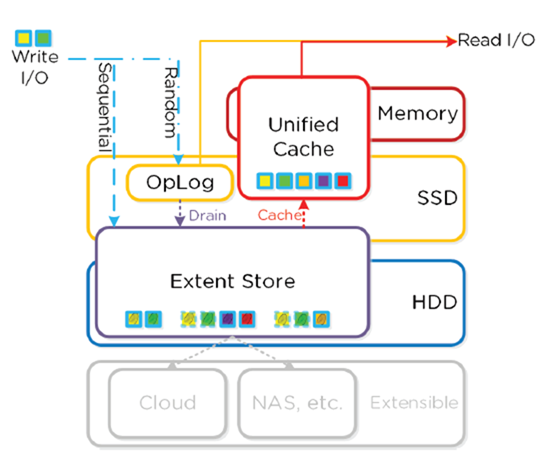

I/O Path and Cache

OpLog

- Staging area for bursts of random writes, stored on SSD Tier

- Writes are coalesced and drained sequentially to the extent store

- Synchronously replicates to other CVMs' OpLogs before acknowledging the write

- All CVMs take part in replication

- Dynamically chosen based on load

- For sequential workloads, OpLog is bypassed (writes go directly to extent store)

- If data is in OpLog and not drained, all read requests fulfilled from OpLog

- When dedupe is enabled, write I/Os are fingerprinted so they can be deduplicated based on those fingerprints.

Extent Store

- Persistent bulk storage of the DSF; spans SSD and HDD.

- Data is either:

- Being drained from OpLog

- Sequential in nature

- ILM determines tier placement

- Sequential = more than 1.5MB of outstanding write I/O
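The routing rule implied by the OpLog and Extent Store notes can be written as a one-line decision. This is a sketch under assumptions: the function name and return labels are hypothetical, and 1.5MB is interpreted here as 1.5 x 1024 x 1024 bytes.

```python
# 1.5MB threshold from the notes; binary megabytes are an assumption.
SEQUENTIAL_THRESHOLD_BYTES = int(1.5 * 1024 * 1024)


def write_destination(outstanding_write_bytes):
    """Return which store receives the write under the rule above."""
    if outstanding_write_bytes > SEQUENTIAL_THRESHOLD_BYTES:
        return "extent_store"  # sequential: bypass the OpLog
    return "oplog"  # random or bursty: stage in the OpLog first


print(write_destination(4096))             # small random write
print(write_destination(2 * 1024 * 1024))  # large outstanding write I/O
```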

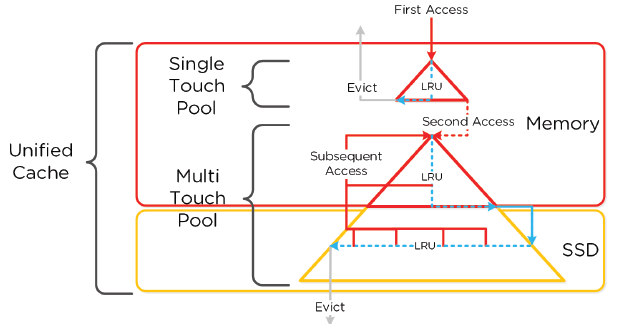

Unified Cache

- Deduplicated read cache spanning CVM memory + SSD

- Data not already in the cache is placed in the single-touch pool (entirely in memory)

- A subsequent request moves the data to the multi-touch pool (memory + SSD)

- Evicted downwards
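The single-touch and multi-touch behavior can be illustrated with a toy two-pool cache. This is a simplified sketch, not Nutanix's implementation: the class name, capacities, and LRU policy are assumptions, and the memory-to-SSD step of downward eviction is not modeled (evicted entries are simply dropped).

```python
from collections import OrderedDict


class UnifiedCacheSketch:
    """Toy read cache: first read -> single-touch, repeat read -> multi-touch."""

    def __init__(self, single_capacity, multi_capacity):
        self.single = OrderedDict()  # in-memory, entries read once
        self.multi = OrderedDict()   # memory + SSD in the real system
        self.single_capacity = single_capacity
        self.multi_capacity = multi_capacity

    def read(self, key, load):
        """Return (value, source), where source says where the data was found."""
        if key in self.multi:
            self.multi.move_to_end(key)  # refresh LRU position
            return self.multi[key], "multi-touch"
        if key in self.single:
            value = self.single.pop(key)  # second touch: promote
            self._insert(self.multi, key, value, self.multi_capacity)
            return value, "single-touch"
        value = load(key)  # cache miss: fetch from backing storage
        self._insert(self.single, key, value, self.single_capacity)
        return value, "miss"

    @staticmethod
    def _insert(pool, key, value, capacity):
        pool[key] = value
        while len(pool) > capacity:
            pool.popitem(last=False)  # evict the least recently used entry


cache = UnifiedCacheSketch(single_capacity=2, multi_capacity=2)
sources = [cache.read("a", str.upper)[1] for _ in range(3)]
print(sources)
```

Keeping one-off reads in a separate pool means a large scan cannot push frequently read data out of the multi-touch pool.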

Extent Cache

- Read cache held entirely in CVM memory.

- Stores non-fingerprinted extents for containers where fingerprinting/dedupe are disabled

Prism

Nutanix Prism is the proprietary management software used in Nutanix Acropolis hyper-converged appliances. Nutanix Prism provides an automated control plane that uses machine learning to support predictive analytics and automated data movement.

Calm

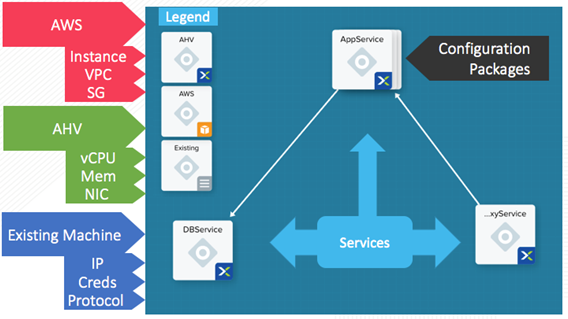

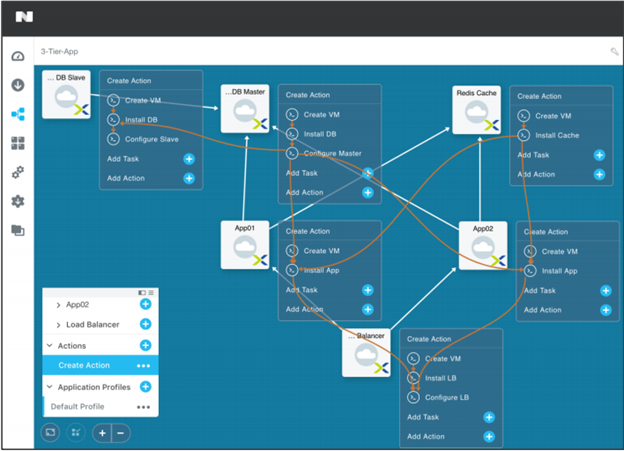

Calm is a multi-cloud application management framework delivered by Nutanix. Calm provides application automation and lifecycle management natively integrated into the Nutanix Platform. With Calm, applications are defined via simple blueprints that can be easily created using industry standard skills and control all aspects of the application’s lifecycle, such as provisioning, scaling, and cleanup. Once created, a blueprint can be easily published to end users through the Nutanix Marketplace, instantly transforming a complex provisioning ticket into a simple one-click request.

- Application Lifecycle Management: Automates the provisioning and deletion of both traditional multi-tiered applications and modern distributed services by using pre-integrated blueprints that simplify application management in both private (AHV) and public (AWS) clouds.

- Customizable Blueprints: Simplifies the setup and management of custom enterprise applications by incorporating the elements of each app, including relevant VMs, configurations and related binaries into an easy-to-use blueprint that can be managed by the infrastructure team.

- Nutanix Marketplace: Publishes the application blueprints directly to the end users through Marketplace.

- Governance: Maintains control through role-based governance, limiting the operations a user can perform according to their permissions.

- Hybrid Cloud Management: Automates the provisioning of a hybrid cloud architecture, scaling both multi-tiered and distributed applications across cloud environments, including AWS.