Describe and differentiate Nutanix data protection technologies such as NearSync, Cloud Connect, and Protection Domains

NearSync

Building upon the traditional asynchronous (async) replication capabilities mentioned previously, Nutanix has introduced support for near-synchronous replication (NearSync).

NearSync provides the best of both worlds: zero impact on primary I/O latency (like async replication) along with a very low RPO (like synchronous (metro) replication). This allows users to have a very low RPO without the overhead of requiring synchronous replication for writes.

This capability uses a new snapshot technology called lightweight snapshot (LWS). Unlike the traditional vDisk-based snapshots used by async, LWS leverages markers and is completely OpLog-based (whereas vDisk snapshots are done in the Extent Store).

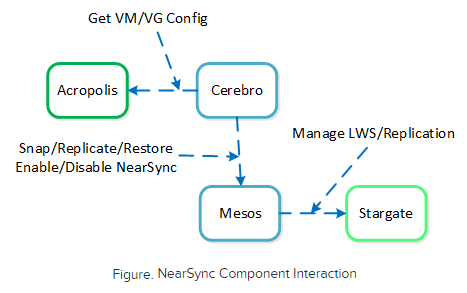

Mesos is a new service added to manage the snapshot layer and abstract the complexities of the full/incremental snapshots. Cerebro continues to manage the high-level constructs and policies (e.g. consistency groups, etc.) whereas Mesos is responsible for interacting with Stargate and controlling the LWS lifecycle.

The following figure shows an example of the communication between the NearSync components:

When a user configures a snapshot frequency <= 15 minutes, NearSync is automatically leveraged. An initial seed snapshot is then taken and replicated to the remote site(s). Once a seed snapshot completes replication in < 60 minutes (this can be the first one or a later one), another seed snapshot is immediately taken and replicated, and LWS snapshot replication starts. Once the second seed snapshot finishes replication, all already-replicated LWS snapshots become valid and the system is in stable NearSync.

In the event NearSync falls out of sync (e.g. network outage, WAN latency, etc.), causing the LWS replication to take > 60 minutes, the system automatically switches back to vDisk-based snapshots. When one of these completes in < 60 minutes, the system immediately takes another snapshot and starts replicating LWS. Once the full snapshot completes, the LWS snapshots become valid and the system is in stable NearSync. This process is similar to the initial enabling of NearSync.
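The transitions described above can be sketched as a small state machine. This is only an illustration of the rules in these notes, not Nutanix code: the names `Mode` and `next_mode` are hypothetical, and treating a replication that takes exactly 60 minutes as too slow is an assumption (the text only covers < 60 and > 60).

```python
from enum import Enum

NEARSYNC_MAX_RPO_MIN = 15   # schedules at or below this use NearSync (from the notes)
SEED_WINDOW_MIN = 60        # replication must finish inside this window (from the notes)


class Mode(Enum):
    ASYNC = "vDisk-based snapshots only"
    TRANSITION = "second seed snapshot in flight, LWS replicating"
    NEARSYNC = "stable NearSync, LWS snapshots valid"


def next_mode(mode, rpo_min, last_replication_min):
    """Return the mode after one replication finishes (hypothetical helper)."""
    if rpo_min > NEARSYNC_MAX_RPO_MIN:
        return Mode.ASYNC                 # schedule too coarse for NearSync
    if last_replication_min >= SEED_WINDOW_MIN:
        return Mode.ASYNC                 # too slow: fall back to vDisk snapshots
    if mode is Mode.ASYNC:
        return Mode.TRANSITION            # fast seed: take another, start LWS
    return Mode.NEARSYNC                  # second seed done: LWS becomes valid
```

Calling `next_mode(Mode.ASYNC, 15, 30)` moves to the transition state, a second fast replication reaches stable NearSync, and any slow replication drops back to `Mode.ASYNC`, mirroring the re-entry path described above.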

Some of the advantages of NearSync are as follows.

- Protection for mission-critical applications: data is secured with minimal data loss in case of a disaster, with more granular control during the restore process.

- No latency or distance requirements of the kind associated with fully synchronous replication.

- Allows recovery from a disaster event in minutes.

To implement the NearSync feature, Nutanix has introduced a technology called Lightweight Snapshots (LWS), which takes snapshots that continuously replicate incoming data generated by workloads running on the active cluster. The LWS snapshots are created at the metadata level only. These snapshots are stored in the LWS store, which is allocated on the SSD tier. The LWS store is automatically allocated when you configure NearSync for a protection domain.

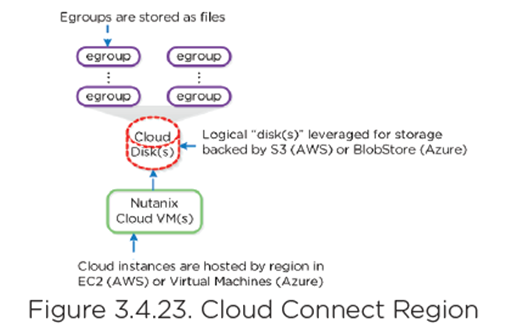

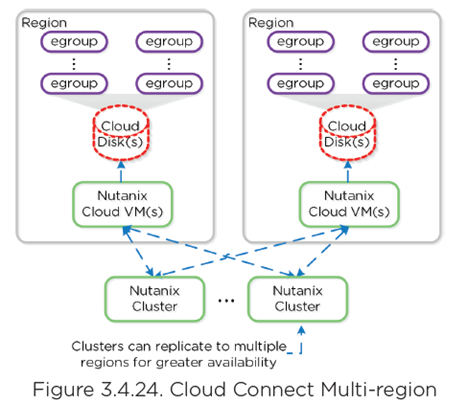

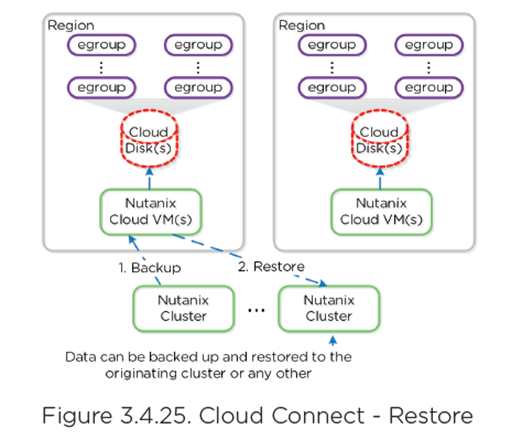

Cloud Connect

- Extends to cloud providers

- Only AWS, Azure

- Cloud remote site is spun up based on Acropolis

- All native capabilities available

- Storage is provided by a “cloud disk”: a logical disk backed by S3 or BlobStore.

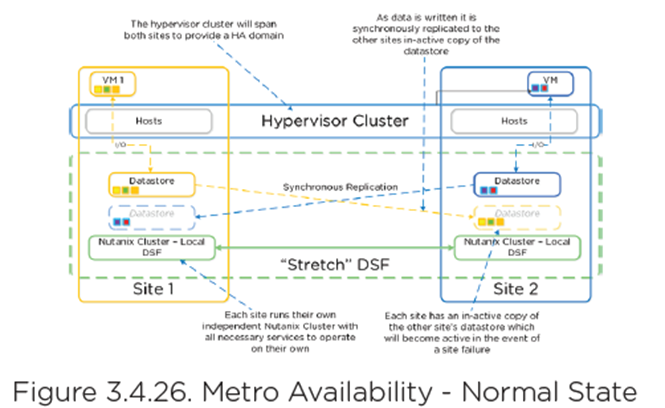

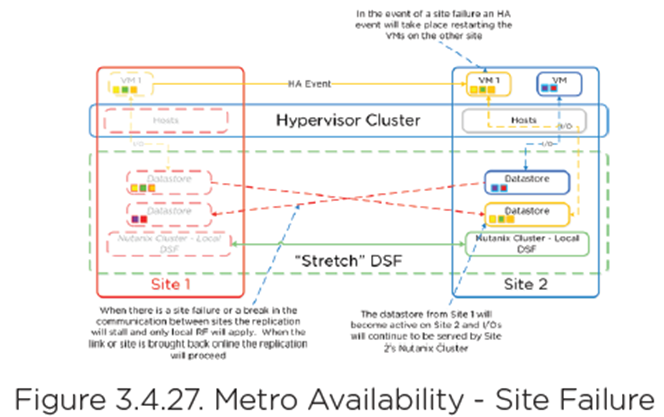

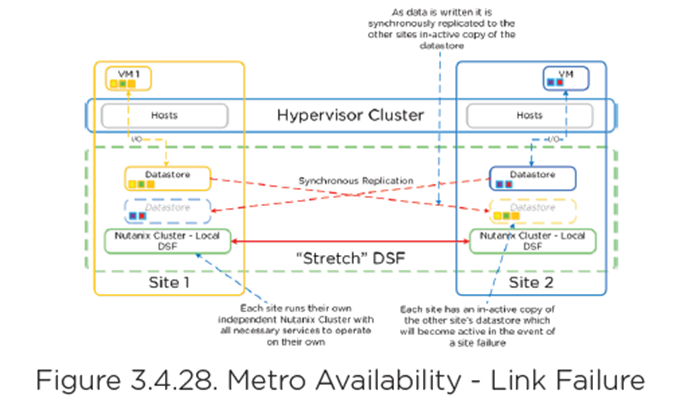

Metro Availability

- Compute spans two locations with access to shared pool of storage (stretch clustering)

- Synchronous replication

- Writes are acknowledged only after they are committed at both sites

- If the link fails, the clusters operate independently

- Once link is re-established, sites are resynchronized (deltas only)
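A toy model can make the write path and delta resync concrete. It is a simplified sketch, not the Metro Availability implementation: the `MetroPair` class, its dictionaries, and the `pending` set are all hypothetical, and real failure handling (for example, who decides which site keeps running) is not modeled.

```python
class MetroPair:
    """Toy model of two sites kept in sync (hypothetical, for illustration)."""

    def __init__(self):
        self.primary = {}
        self.secondary = {}
        self.link_up = True
        self.pending = set()   # keys changed while the link was down (the deltas)

    def write(self, key, value):
        self.primary[key] = value
        if self.link_up:
            self.secondary[key] = value   # synchronous: commit remotely before ack
        else:
            self.pending.add(key)         # site runs independently, tracks deltas
        return True                       # acknowledgement to the writer

    def restore_link(self):
        """Resynchronize by sending only the deltas; return how many were sent."""
        self.link_up = True
        sent = len(self.pending)
        for key in self.pending:
            self.secondary[key] = self.primary[key]
        self.pending.clear()
        return sent
```

After a link outage, `restore_link()` copies only the keys recorded in `pending`, which is the "deltas only" behavior noted in the last bullet.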

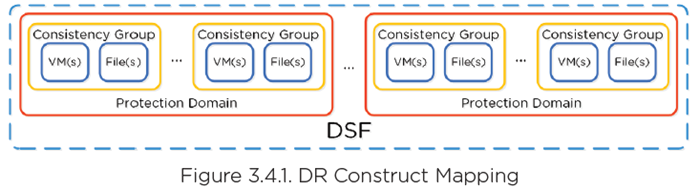

Protection Domains

- Macro group of VMs/files to protect

- Replicated together on schedule

- Protect full container or individual VMs/files

- Tip: create multiple PDs for various service tiers driven by RTO/RPO.

Consistency Group (CG): subset of the VMs/files in a PD that are kept crash-consistent

- VMs/files in the PD that need to be snapshotted together in a crash-consistent manner

- A PD can contain multiple CGs

Snapshot Schedule: snap/replication schedule for VMs in PD/CG

Retention Policy: number of local/remote snaps to retain.

- Remote site must be configured for remote retention/replication

Remote Site: remote cluster as target for backup/DR
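How these constructs nest can be shown with a small data model. This is a study aid only: the class and field names below are hypothetical and do not correspond to any Nutanix API or CLI object. The one rule it enforces comes from the notes above: a remote site must be configured before a schedule can retain snapshots on it.

```python
from dataclasses import dataclass, field


@dataclass
class ConsistencyGroup:
    name: str
    entities: list            # VMs/files snapshotted together, crash-consistent


@dataclass
class Schedule:
    every_minutes: int        # snapshot/replication interval
    local_retention: int      # number of local snapshots to keep
    remote_retention: dict = field(default_factory=dict)  # site name -> snapshots to keep


@dataclass
class ProtectionDomain:
    name: str
    consistency_groups: list = field(default_factory=list)
    schedules: list = field(default_factory=list)
    remote_sites: set = field(default_factory=set)

    def add_schedule(self, schedule):
        # Remote retention only makes sense for sites already configured.
        missing = set(schedule.remote_retention) - self.remote_sites
        if missing:
            raise ValueError(f"remote site(s) not configured: {sorted(missing)}")
        self.schedules.append(schedule)
```

For example, `ProtectionDomain("gold", remote_sites={"dr-site"})` accepts `Schedule(60, 24, {"dr-site": 48})` but rejects a schedule that names an unconfigured site.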

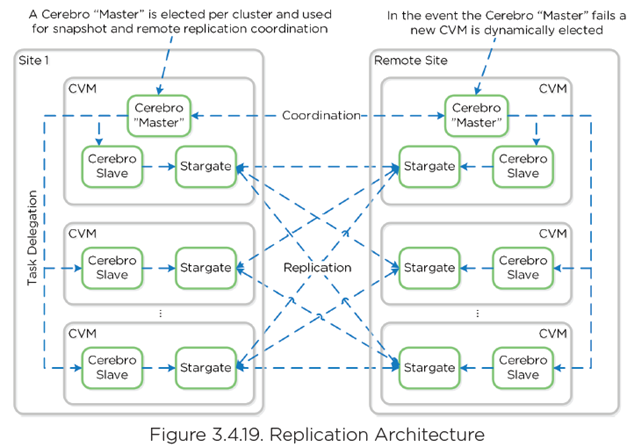

Replication and Disaster Recovery

- Cerebro manages DR/Replication

- Runs on every node with one master

- Accessed via CVM:2020

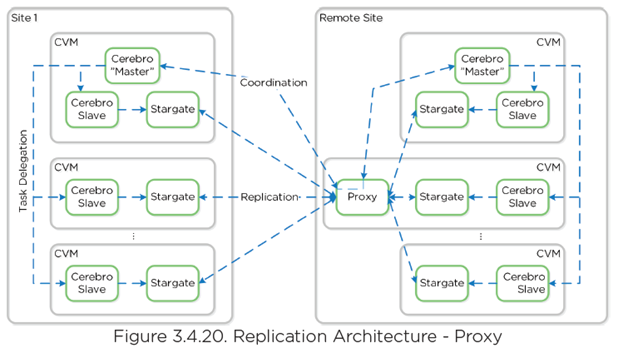

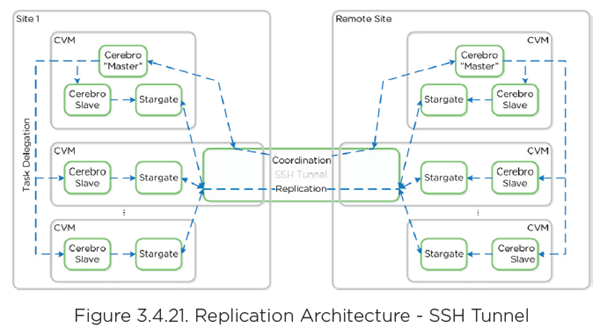

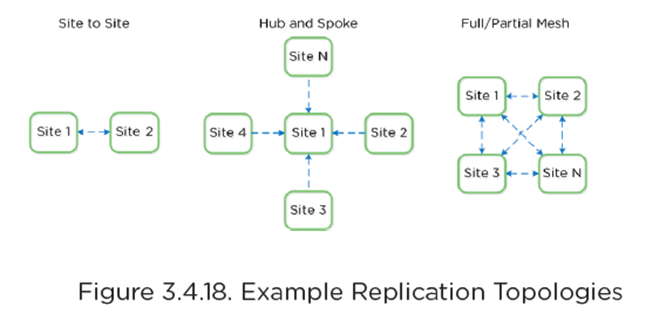

Replication Topologies

Replication Lifecycle

- Leverages Cerebro

- Manages task delegation to local Cerebro slaves and coordinates with the Cerebro master

- Master determines data to be replicated

- Tasks delegated to slaves

- Slaves tell Stargate what to replicate/where

- Extent reads on the source are checksummed to ensure consistency

- New extents are checksummed at the target
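The checksum step at each end can be illustrated as follows. The notes do not say which checksum algorithm is used, so CRC32 from Python's `zlib` is a stand-in, and both function names are hypothetical.

```python
import zlib


def read_extent_with_checksum(data: bytes):
    """Source side: return the extent together with its checksum."""
    return data, zlib.crc32(data)


def verify_at_target(received: bytes, source_checksum: int) -> bool:
    """Target side: recompute the checksum and compare with the source's."""
    return zlib.crc32(received) == source_checksum
```

If the bytes change in transit, the recomputed value no longer matches the one calculated at the source, and the target can reject the extent.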