Identify the need for Flash mode/AHV Turbo for VM storage or performance tuning

What is Flash Mode?

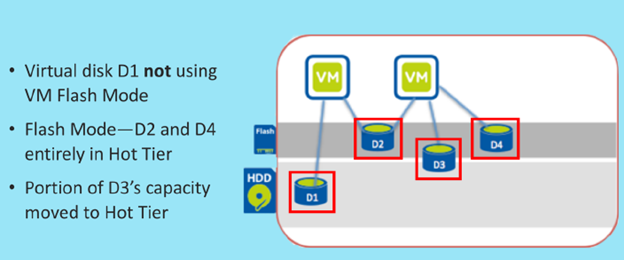

Flash Mode is a feature that guarantees that an entire virtual machine (VM) virtual disk, or a portion of one, always resides in the hot tier. VM Flash Mode is also referred to as VM/vDisk pinning.

- Up to 25% of the SSD tier of the entire cluster can be reserved as flash mode for VMs or VGs.

- All virtual disks attached to the VM are automatically migrated to the SSD tier.

- Any subsequently added virtual disks to this VM stay on the SSD tier.

- The VM configuration can be updated to remove the flash-mode from any virtual disk.

- Hypervisor Agnostic

Flash Mode Use Cases

- Latency-sensitive applications

- Workloads that initiate data migrations to the cold tier during jobs

- Activate for read workloads. Write-intensive workloads already hit SSD via either:

- Random writes that go to SSD because of oplog

- Sequential writes that go to hot tier in extent store.

VM Flash Mode should be used as a last resort, as it reduces the ability of the DSF to manage workloads in a dynamic manner.

Precautions

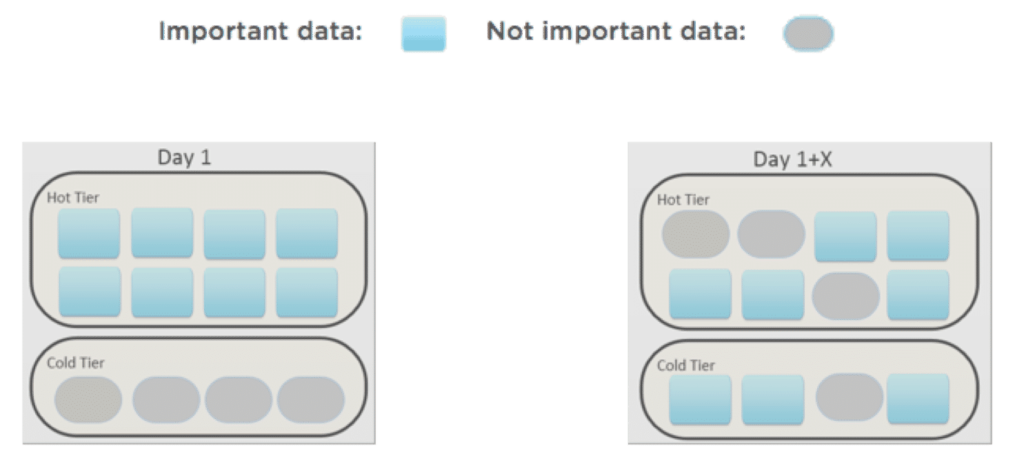

- VM Flash Mode increases the performance of the virtual disks attached to the VM, but can lower the performance of the VMs on which this feature is not enabled.

- Make sure to perform a thorough analysis of the potential performance issues before enabling this feature.

- VM/VG Flash Mode does not restrict the usage for the VM or VG on the hot tier; if space is available on the hot tier, more data from the VM or VG can reside on the hot tier.

- If Erasure Coding is enabled on the cluster, the parity always resides on the HDD.

- Do not use Flash Mode with capacity tier deduplication.

Per-Virtual Machine Virtual Disk Level

- The virtual disks and VM Flash Mode can be configured on existing virtual machine virtual disks or on newly created virtual disks.

- If enabling VM Flash Mode on an existing virtual disk with data residing on the cold tier, the ILM mechanism migrates the data to the hot tier, rather than the VM Flash Mode feature.

- VM Flash Mode can be configured for the entire virtual disk (or disks) or a portion of the virtual disk, specified using GB.

- DSF assigns data to the hot tier based on a First-In First-Out (FIFO) basis when using the portion configuration option.

- If SSD space is available, data in excess of the minimum VM Flash Mode configuration requirements can reside in the hot tier.

- ILM does not migrate data for powered off virtual machines using VM Flash Mode to the cold tier.

Node Failure Scenario

- During a node failure scenario, the available SSD capacity is reduced.

- This can lead to a situation where more than 25 percent of the SSD tier is pinned to SSD by VM Flash Mode. This may result in alerts in Prism. Example:

- 4 Nutanix nodes provide 600 GB VM Flash Mode SSD capacity.

- 500 GB are used by VM Flash Mode.

- 1 Nutanix node fails and only 450 GB is available for VM Flash Mode.

- When the failure scenario occurs, the soft limit increases to 50% (900 GB instead of 450 GB) of total SSD capacity.

- Thus data will not be migrated from the hot tier to the cold tier.

- DSF migrates data from the hot to cold tier during a node failure when VM Flash Mode uses more than 50% (900 GB) of the SSD space.
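
The arithmetic in the example above can be checked with a short sketch. It assumes that the 600 GB figure is 25% of a 2400 GB cluster-wide SSD tier and that all four nodes contribute equally (600 GB of SSD each); the function name and its parameters are hypothetical and not part of any Nutanix tool.

```python
def flash_mode_limits(total_ssd_gb, pinned_gb,
                      normal_fraction=0.25, failure_fraction=0.50):
    """Return the Flash Mode limits (in GB) for a given SSD tier size.

    normal_fraction is the 25% reservation limit, failure_fraction the
    50% soft limit that applies during a node failure.
    """
    normal_limit = total_ssd_gb * normal_fraction
    failure_limit = total_ssd_gb * failure_fraction
    return {
        "normal_limit_gb": normal_limit,
        "failure_limit_gb": failure_limit,
        # More than 25% pinned: may raise alerts in Prism.
        "over_normal_limit": pinned_gb > normal_limit,
        # More than 50% pinned during a failure: data moves to the cold tier.
        "migrates_to_cold_tier": pinned_gb > failure_limit,
    }


# Assumption: 4 equal nodes, 2400 GB SSD in total (600 GB is 25% of that).
healthy = flash_mode_limits(total_ssd_gb=2400, pinned_gb=500)
# One node fails, leaving 3 nodes and 1800 GB of SSD.
degraded = flash_mode_limits(total_ssd_gb=1800, pinned_gb=500)

print(healthy)
print(degraded)
```

With one node down, the 500 GB of pinned data exceeds the 450 GB normal limit but stays well under the 900 GB soft limit, so nothing is migrated to the cold tier.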

Unaffected by VM Flash Mode

- In heterogeneous Nutanix clusters, when VM Flash Mode places a virtual machine virtual disk entirely in hot tier it could fail a write I/O due to unavailable SSD space.

- Clone: A newly cloned virtual disk does not preserve the VM Flash Mode configuration. The VM Flash Mode configuration applies only to the original virtual disk. You must manually configure VM Flash Mode for the newly cloned virtual disk to take advantage of the feature.

- Compression: Does not affect VM Flash Mode. The DSF compression feature reduces the amount of SSD space used.

- Deduplication: Does not affect VM Flash Mode. Deduplication reduces the amount of SSD space used.

- Snapshot: A DSF VM snapshot preserves the virtual disk VM Flash Mode configuration. This configuration is not preserved when restoring a DSF VM snapshot, however, which means you must configure VM Flash Mode for the restored virtual disk to take advantage of the feature.

- Replication: Remote copies will not have VM Flash Mode enabled. The original virtual disk keeps its VM Flash Mode configuration.

- Disaster Recovery: Remote copies will not have VM Flash Mode enabled. The original virtual disk keeps its VM Flash Mode configuration. A restore operation does not preserve the VM Flash Mode configuration.

- Live Migration/vMotion: Does not affect VM Flash Mode configuration.

- Storage Migration/Storage vMotion: The new virtual disk created on the target datastore will not have VM Flash Mode enabled. Configure VM Flash Mode for the newly created virtual disk to take advantage of the feature.

- Metro Availability: VM Flash Mode configuration will not be propagated to the VM running in the second Nutanix cluster.

Monitoring Flash Mode

arithmos_cli master_get_entities entity_type=vm filter_criteria=controller.total_pinned_vdisks=gt=0
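
For scripted checks, the monitoring command can be assembled programmatically. The sketch below only builds the argument list and runs it if `arithmos_cli` is present on the machine (it would normally be run on a CVM). The helper names are hypothetical, and parameterising the threshold after `gt=` is an assumption rather than documented behaviour.

```python
import shutil
import subprocess


def pinned_vdisk_query(entity_type="vm", min_pinned=0):
    """Build the argv for listing entities with pinned vDisks.

    min_pinned is a hypothetical parameter: it assumes the filter accepts
    thresholds other than 0 after "gt=".
    """
    return [
        "arithmos_cli",
        "master_get_entities",
        f"entity_type={entity_type}",
        f"filter_criteria=controller.total_pinned_vdisks=gt={min_pinned}",
    ]


def run_query(argv):
    """Run the command if the binary exists; return its stdout, else None."""
    if shutil.which(argv[0]) is None:
        return None  # not on a machine where arithmos_cli is installed
    result = subprocess.run(argv, capture_output=True, text=True, check=False)
    return result.stdout


argv = pinned_vdisk_query()
print(" ".join(argv))
```

Calling `run_query(argv)` returns the raw command output, or `None` when the tool is not installed.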

Modify Flash Mode

Enable/disable flash mode for VG:

acli vg.update <vg_name> flash_mode=<true/false>

Remove Flash Mode for particular disk:

acli vg.disk_update <vg_name> <index_value> flash_mode=false

AHV Turbo Mode

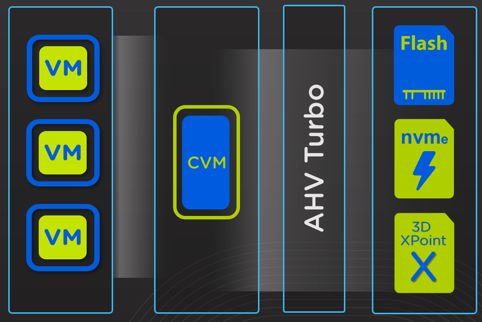

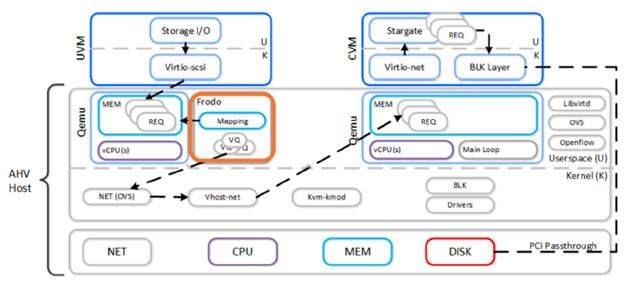

AHV Turbo mode is a highly optimised I/O path (shortened and widened) between the User VM (UVM) and Nutanix Stargate (the I/O engine).

The claim that in-kernel is inherently better for performance is a myth. Nutanix has achieved major performance improvements by doing the heavy lifting of the I/O data path in user space, the opposite of the much-hyped “in-kernel” approach.

Turbo mode allows for multiple queues per disk device(s), which results in performance improvements and other benefits:

- Hypervisor CPU overhead reductions of up to 25%

- 3x improved performance compared to QEMU

- Eliminates the need for multiple PVSCSI adapters and for spreading disks across controllers

- Eliminates bottlenecks associated with legacy hypervisors, such as VAAI Atomic Test and Set (ATS)

- Every vDisk has its own queue (not one per datastore/container)

- Enhanced by adding a per-vCPU queue at the virtual controller level.

AHV Turbo Requirements

- User VMs must have at least 2 vCPUs when powered on

- 1vCPU = 1 Frodo thread/session per disk device

- >=2vCPU = 2 Frodo threads/sessions per disk device

- Windows VMs must be using the latest Nutanix VirtIO driver package

- Linux VMs must have one of the following parameters added to the kernel command line

scsi_mod.use_blk_mq=y

scsi_mod.use_blk_mq=1
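
The requirements above can be expressed as two small checks. This is a minimal sketch: the function names are hypothetical, the thread counts come straight from the list above, and the second helper assumes the `scsi_mod.use_blk_mq` setting is passed as a kernel boot parameter (so it would be visible in a string such as the contents of `/proc/cmdline`). The sample command line is made up for illustration.

```python
def frodo_threads_per_disk(vcpus):
    """Frodo threads/sessions per disk device for a VM with `vcpus` vCPUs."""
    if vcpus < 1:
        raise ValueError("a VM needs at least one vCPU")
    # 1 vCPU -> 1 thread; 2 or more vCPUs -> 2 threads per disk device.
    return 1 if vcpus == 1 else 2


def blk_mq_enabled(cmdline):
    """Return True if the kernel command line enables SCSI multi-queue."""
    accepted = ("scsi_mod.use_blk_mq=y", "scsi_mod.use_blk_mq=1")
    return any(param in accepted for param in cmdline.split())


# Hypothetical kernel command line, for illustration only.
sample_cmdline = "BOOT_IMAGE=/vmlinuz root=/dev/sda1 ro scsi_mod.use_blk_mq=y"

print(frodo_threads_per_disk(1))
print(frodo_threads_per_disk(4))
print(blk_mq_enabled(sample_cmdline))
```

On a real Linux guest, the string passed to `blk_mq_enabled` could be read from `/proc/cmdline`.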

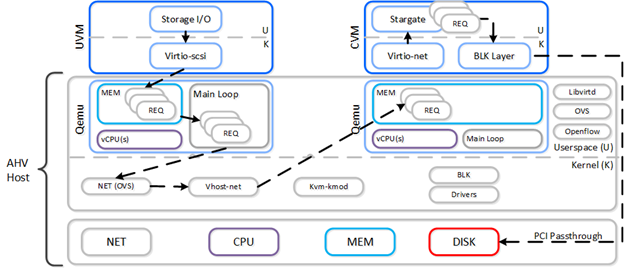

When a VM performs an I/O over the traditional path, the following steps occur:

- The VM’s OS issues SCSI command(s) to the virtual device(s)

- Virtio-scsi takes those requests and places them in the guest’s memory

- Requests are handled by the QEMU main loop

- Libiscsi inspects each request and forwards it

- Network layer forwards requests to local CVM (or externally if local is unavailable)

- Stargate handles request(s)

The following path looks similar to the traditional I/O path, except for a few key differences:

- The QEMU main loop is replaced by Frodo (vhost-user-scsi)

- Frodo exposes multiple virtual queues (VQs) to the guest (one per vCPU)

- Leverages multiple threads for multi-vCPU VMs

- Libiscsi is replaced by our own much more lightweight version

The multi-queue I/O flow is handled by multiple Frodo (Turbo mode) threads and passed on to Stargate.
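
The idea of one virtual queue per vCPU, drained by several threads instead of a single main loop, can be illustrated with a toy model. This is a conceptual sketch only and does not reflect how Frodo is implemented: the function name is hypothetical, it uses one worker thread per queue for simplicity, and round-robin placement stands in for "the vCPU that issued the request".

```python
import queue
import threading


def run_multiqueue(requests, num_vcpus):
    """Distribute requests over one queue per vCPU and drain them in parallel."""
    vqs = [queue.Queue() for _ in range(num_vcpus)]  # one virtual queue per vCPU
    handled = []
    lock = threading.Lock()

    def worker(vq_index):
        # Each worker drains its own queue until it sees the None sentinel.
        while True:
            req = vqs[vq_index].get()
            if req is None:
                break
            with lock:
                handled.append((vq_index, req))  # stand-in for handing off to Stargate

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_vcpus)]
    for t in threads:
        t.start()
    for n, req in enumerate(requests):
        vqs[n % num_vcpus].put(req)  # round-robin stands in for the issuing vCPU
    for vq in vqs:
        vq.put(None)  # tell each worker to stop
    for t in threads:
        t.join()
    return handled


handled = run_multiqueue([f"io-{n}" for n in range(8)], num_vcpus=4)
print(sorted(handled))
```

In this model no single loop sees every request, which is the property the multi-queue path relies on to avoid a single-threaded bottleneck.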