Share a Nutanix Datastore to a Non-Nutanix Cluster in vSphere

For a migration, I needed to mount a Nutanix datastore on a non-Nutanix cluster in my environment, and I ran into some issues. There are plenty of helpful articles on mounting Nutanix datastores via NFS, such as this one here. My dilemma was that I needed the Nutanix datastore presented to both clusters simultaneously.

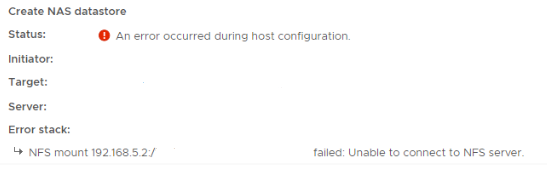

I added the appropriate IPs to the Storage Container's whitelist and used the “Mount Datastore to Additional Hosts” option in the HTML5 client. This failed with “An error occurred during the host configuration”.

The Workaround

Digging a little deeper into the host's Tasks, I saw that the host was trying to mount the datastore using the CVM IP address 192.168.5.2. That address lives on the internal network between each Nutanix host and its local CVM and is not routable, so a non-Nutanix host will never be able to mount from it. To get this working, I implemented an unsupported workaround. The first step is to create a static route on each non-Nutanix host that sends traffic for the CVM IP address to the Cluster VIP (192.168.1.75 in my environment):

esxcfg-route -a 192.168.5.2/32 192.168.1.75
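Because the route is entered by hand on every host, it can help to wrap the command so that it is only added when missing and is read back afterwards. The sketch below assumes the ESXi shell (POSIX `sh`), the 192.168.5.2 and 192.168.1.75 addresses from this example, and the Network/Netmask/Gateway/Interface column layout that `esxcfg-route -l` prints (worth checking on your build). The function names and the `ROUTE_CMD` override are hypothetical, added only so the logic can be exercised with a stand-in command.

```shell
# Sketch: add the CVM host route only if it is missing, then read it back.
# Assumptions: ESXi shell (POSIX sh); addresses match this post's example.
CVM_IP="${CVM_IP:-192.168.5.2}"
CLUSTER_VIP="${CLUSTER_VIP:-192.168.1.75}"
# Hypothetical override so the logic can be exercised with a stand-in command.
ROUTE_CMD="${ROUTE_CMD:-esxcfg-route}"

# Succeeds if the routing table read on stdin (esxcfg-route -l layout:
# Network, Netmask, Gateway, Interface) holds a /32 route for CVM_IP via CLUSTER_VIP.
route_present() {
    awk -v ip="$CVM_IP" -v gw="$CLUSTER_VIP" \
        '$1 == ip && $2 == "255.255.255.255" && $3 == gw { found = 1 }
         END { exit (found ? 0 : 1) }'
}

add_cvm_route() {
    if $ROUTE_CMD -l | route_present; then
        echo "route to $CVM_IP via $CLUSTER_VIP already present"
        return 0
    fi
    $ROUTE_CMD -a "$CVM_IP/32" "$CLUSTER_VIP" || return 1
    # Read the table back instead of assuming the add took effect.
    if $ROUTE_CMD -l | route_present; then
        echo "route to $CVM_IP via $CLUSTER_VIP added"
    else
        echo "route to $CVM_IP via $CLUSTER_VIP missing after add" >&2
        return 1
    fi
}
```

After loading these functions on a non-Nutanix host, running `add_cvm_route` either adds the route or reports that it is already there, and fails loudly if the route cannot be found right after adding it.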

So what is going on here? This borrows from the Nutanix design for maintaining availability during CVM upgrades or failures. When the local CVM is unavailable, a static route is injected into the ESXi host to re-route its traffic to a working CVM in the cluster. When the local CVM comes back online, the static route is removed and I/O resumes as normal.

CVM Autopathing

What’s going on under the covers is a feature called “CVM Autopathing”. Here’s a nice explanation of it from the Nutanix Bible:

In the event of a CVM “failure” the I/O which was previously being served from the down CVM, will be forwarded to other CVMs throughout the cluster. ESXi and Hyper-V handle this via a process called CVM Autopathing, which leverages HA.py (like “happy”), where it will modify the routes to forward traffic going to the internal address (192.168.5.2) to the external IP of other CVMs throughout the cluster. This enables the datastore to remain intact, just the CVM responsible for serving the I/Os is remote.

In this configuration, we are really just “tricking” the non-Nutanix host into thinking that it has connectivity to a CVM within the cluster. This lets us mount the datastore using the internal CVM IP address, just as the Nutanix hosts do!

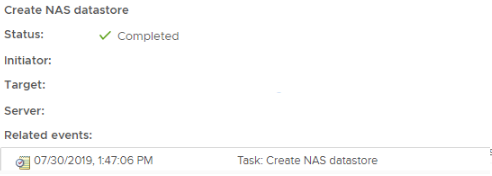

After adding the static route, the “Mount Datastore to Additional Hosts” option works as it should. I can now share my Nutanix datastore across both my Nutanix and non-Nutanix clusters!
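Where the vSphere Client is not handy, the same mount can be sketched from the ESXi shell with `esxcli storage nfs add`. This assumes the static route is already in place and that the container is exported as `/<container name>`; `migration-ctr` is a hypothetical container and datastore name, and the `ESXCLI` override is only there for dry runs. Keeping 192.168.5.2 as the server address matters: vCenter generally identifies an NFS datastore by its server and share, so mounting through the Cluster VIP instead would likely appear as a separate datastore rather than the shared one.

```shell
# Sketch: mount the Nutanix container over NFS from the ESXi shell.
# Assumptions: the static route to 192.168.5.2 is already in place, and the
# container/datastore names below are hypothetical placeholders.
CVM_IP="${CVM_IP:-192.168.5.2}"
CONTAINER="${CONTAINER:-migration-ctr}"
DATASTORE="${DATASTORE:-migration-ctr}"
# Set ESXCLI="echo esxcli" to print the commands instead of running them.
ESXCLI="${ESXCLI:-esxcli}"

mount_nutanix_nfs() {
    # Same server (the internal CVM IP) and share path the Nutanix hosts use.
    $ESXCLI storage nfs add --host="$CVM_IP" --share="/$CONTAINER" \
        --volume-name="$DATASTORE" || return 1
    # List the NFS mounts so the result can be checked.
    $ESXCLI storage nfs list
}
```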

I added the static route to every host in the non-Nutanix cluster so that all of them could access the datastore. Once the migration was complete, I removed the static route, which can be done with:

esxcfg-route -d 192.168.5.2/32 192.168.1.75
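If the datastore is also being taken off the non-Nutanix hosts, it is worth unmounting it before the route goes away: without the route, those hosts can no longer reach 192.168.5.2 and a still-mounted datastore is simply left inaccessible. The sketch below assumes that unmount step; `cleanup_cvm_route`, `migration-ctr`, and the command overrides are hypothetical.

```shell
# Sketch: per-host cleanup after the migration. Assumption: the datastore is
# also being unmounted from this non-Nutanix host; DATASTORE is hypothetical.
CVM_IP="${CVM_IP:-192.168.5.2}"
CLUSTER_VIP="${CLUSTER_VIP:-192.168.1.75}"
DATASTORE="${DATASTORE:-migration-ctr}"
ESXCLI="${ESXCLI:-esxcli}"
ROUTE_CMD="${ROUTE_CMD:-esxcfg-route}"

cleanup_cvm_route() {
    # Unmount first; once the route is gone the host cannot reach 192.168.5.2,
    # so a still-mounted datastore would just turn inaccessible.
    $ESXCLI storage nfs remove --volume-name="$DATASTORE" || return 1
    # Only reached if the unmount succeeded.
    $ROUTE_CMD -d "$CVM_IP/32" "$CLUSTER_VIP"
}
```

Running it once on each non-Nutanix host (for example over SSH) mirrors the per-host route additions described above.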

Hi there,

This is exactly what I was attempting to do. The logic is spot on and makes sense. However, upon implementing this I noticed that a few seconds after I add the static route (using the esxcfg-route -a command), the route simply gets removed.

Running esxcfg-route -l does NOT show the added route. Did you run into this by chance?

I can confirm your method works. I was able to add the route, quickly mount the Nutanix NFS datastore within that 2-3 second window, and lo and behold, it worked!

However, the route was deleted shortly afterwards, and after a few minutes my datastore showed as “inaccessible” (as expected).

I'm trying to figure out how to get that route to “stick”. I'm also not sure why it's getting deleted automatically.

Hello, thank you for the feedback! I can’t say that I’ve seen the route disappear like that. I’ll do some testing in my lab to see if I can reproduce the issue.