vSAN Cluster Partition

While performing firmware updates on my vSAN-enabled hosts, I noticed some vSAN-related alarms firing. The one in particular that was bothering me was the Network Health – vSAN Cluster Partition. Why? Well, let’s take a look at what that means.

All vSAN hosts should be able to communicate metadata updates over both multicast and unicast (strictly unicast as of vSAN 6.6). If hosts are unable to communicate with each other, the cluster is split into multiple network partitions. This can make vSAN objects unavailable until the misconfiguration is resolved.

The first step is to rule out networking. I ran a vmkping to/from my partitioned host over the vSAN vmkernel port:

vmkping -I vmk5 -s 8192 -d 10.112.23.15

The -I specifies the interface we’re using. In my case, vSAN is using vmkernel port vmk5. The -s specifies the packet size. Since I’m using jumbo frames, I set it to 8192. The -d disables fragmentation, so an undersized MTU anywhere along the path shows up as a failed ping instead of being hidden by fragmented packets.
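As a side note on the packet size: with -d set, the value passed to -s plus 28 bytes of headers (a 20-byte IPv4 header and an 8-byte ICMP header) has to fit inside the MTU. A minimal sketch of that arithmetic, assuming an MTU of 9000 on the vSAN vmkernel port (the function name is only for illustration):

```shell
#!/bin/sh
# Largest ICMP payload that fits in one unfragmented IPv4 packet.
# Overhead: 20 bytes IPv4 header (no options) + 8 bytes ICMP header = 28 bytes.
max_ping_payload() {
    mtu="$1"
    echo $((mtu - 28))
}

max_ping_payload 9000   # 8972
max_ping_payload 1500   # 1472
```

So 8192 fits comfortably within a 9000-byte MTU, while -s 8972 would exercise the full frame size.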

No errors with networking. Time for a VMware support ticket!

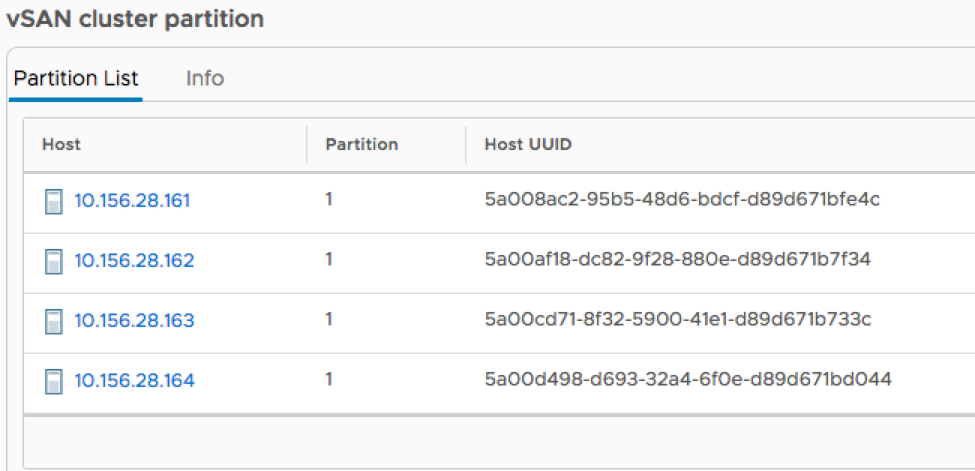

After some initial troubleshooting, the engineer was able to confirm a network partition with the following command: esxcli vsan cluster get. The Sub-Cluster Member Count did not reflect all hosts in my cluster. Further troubleshooting revealed that we needed to manually rebuild the vSAN Unicast agent list. This is verified with esxcli vsan cluster unicastagent list. You should have an entry for each host in the cluster. If you do not, there’s a problem!
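The member count check can be scripted so it is easy to repeat on every host. This is only a sketch: it assumes the output of esxcli vsan cluster get contains a line of the form "Sub-Cluster Member Count: N", and the function names and sample input are made up for illustration.

```shell
#!/bin/sh
# Extract the Sub-Cluster Member Count from `esxcli vsan cluster get`
# output supplied on stdin. Assumes a "Sub-Cluster Member Count: N" line.
member_count() {
    awk -F': ' '/Sub-Cluster Member Count/ { print $2; exit }'
}

# Compare the reported count with the number of hosts you expect.
check_member_count() {
    expected="$1"
    actual="$(member_count)"
    if [ "$actual" = "$expected" ]; then
        echo "OK: $actual of $expected hosts visible"
    else
        echo "MISMATCH: ${actual:-0} of $expected hosts visible"
        return 1
    fi
}

# On a host: esxcli vsan cluster get | check_member_count 6
# Illustrative input only (not captured from a real host):
printf '   Sub-Cluster Member Count: 5\n' | check_member_count 5
```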

Prep Work

The first step is to gather the node UUIDs and vSAN vmkernel port IP addresses. So open up an SSH session to every host in the cluster! For the UUID, use cmmds-tool whoami and copy the output into a notepad. Next, gather the vSAN vmkernel IP with esxcli network ip interface ipv4 get | grep vmk# (replace # with your vmk number), and copy this into the notepad as well. Rinse and repeat with each host in the cluster. This will let me build my command lists.

Note: I created two lists. The first contains the UUIDs of every host in the cluster except the partitioned host. This list will be executed on the partitioned host, which adds every other host’s unicast agent to its table. The second list contains the partitioned host only. This list will be executed on every other host in the cluster to add the partitioned host’s unicast agent to their tables.

List for Non-Partitioned Hosts:

esxcli vsan cluster unicastagent add -t node -u 931c6ds5-09e2-42fb-b7f4-4994d72b401b -U true -a 10.112.23.15 -p 12321
esxcli vsan cluster unicastagent add -t node -u 931cff25-a54b-40ab-8695-481b42cccc19 -U true -a 10.112.23.16 -p 12321
esxcli vsan cluster unicastagent add -t node -u 931cs325-dfaa-4e27-b38c-65dffb5c03ba -U true -a 10.112.23.17 -p 12321
esxcli vsan cluster unicastagent add -t node -u 931c6ds5-b00a-4231-9ea5-f99106996ac7 -U true -a 10.112.23.18 -p 12321
esxcli vsan cluster unicastagent add -t node -u 931c63fa-64ce-436d-95d2-e49e71f99350 -U true -a 10.112.23.20 -p 12321

List for Partitioned Host:

esxcli vsan cluster unicastagent add -t node -u 931c632d-49e7-4cce-9905-e2f36ccfed87 -U true -a 10.112.23.19 -p 12321
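With more than a handful of hosts, typing these lists by hand gets error-prone. Here is a rough sketch of generating them from the "UUID IP" pairs collected in the notepad. The function names and the input layout are my own assumptions; the flags simply mirror the commands above.

```shell
#!/bin/sh
# Build one "unicastagent add" command from a node UUID and a vSAN vmkernel IP.
# Flags mirror the commands in this post: -t node, -U true, port 12321.
unicast_add_cmd() {
    echo "esxcli vsan cluster unicastagent add -t node -u $1 -U true -a $2 -p 12321"
}

# Read "UUID IP" pairs on stdin and print one command per host,
# skipping the host whose IP is passed as $1 (the host the list will run on).
build_list_excluding() {
    skip_ip="$1"
    while read -r uuid ip; do
        if [ -z "$uuid" ] || [ "$ip" = "$skip_ip" ]; then
            continue
        fi
        unicast_add_cmd "$uuid" "$ip"
    done
}

# Example: the list to run on the partitioned host (10.112.23.19),
# using two of the hosts from this post.
build_list_excluding 10.112.23.19 <<'EOF'
931cff25-a54b-40ab-8695-481b42cccc19 10.112.23.16
931c632d-49e7-4cce-9905-e2f36ccfed87 10.112.23.19
EOF
```

The output is only text, so review it before pasting anything into an SSH session.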

Execution

Prior to executing the commands, we need to stop updates to the vSAN cluster via vCenter. I actually used this command during another blog post.

esxcfg-advcfg -s 1 /VSAN/IgnoreClusterMemberListUpdates

Setting this to “1” tells the host to ignore vSAN cluster member list updates coming from vCenter. This allows me to make changes without vCenter overriding them.

Now I can execute the command lists on the hosts. Execute each command from the Non-Partitioned Hosts list on the partitioned host. Likewise, execute the command from the Partitioned Host list on every other host in the cluster.

Once completed, execute esxcli vsan cluster unicastagent list on each host in the cluster again, and the correct number of hosts should be listed. Then re-enable cluster member list updates:

esxcfg-advcfg -s 0 /VSAN/IgnoreClusterMemberListUpdates
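The unicastagent list check from the previous step can also be turned into a quick count. This sketch assumes each entry in the output starts with the node UUID in the first column, which is how I remember the table looking. The function name and the simplified sample rows are illustrative only.

```shell
#!/bin/sh
# Count entries in `esxcli vsan cluster unicastagent list` output on stdin.
# A row counts as an entry if it starts with something UUID-shaped
# (8-4-4-4-12). Alphanumerics are accepted rather than strict hex so the
# anonymized UUIDs in this post still match.
count_unicast_agents() {
    grep -Ec '^[[:space:]]*[0-9A-Za-z]{8}(-[0-9A-Za-z]{4}){3}-[0-9A-Za-z]{12}[[:space:]]' || true
}

# On a host: esxcli vsan cluster unicastagent list | count_unicast_agents
# Simplified, illustrative input (not captured from a real host):
printf '%s\n' \
  'NodeUuid  IsWitness  Supports Unicast  IP Address  Port' \
  '--------  ---------  ----------------  ----------  -----' \
  '931cff25-a54b-40ab-8695-481b42cccc19  0  true  10.112.23.16  12321' \
  '931c63fa-64ce-436d-95d2-e49e71f99350  0  true  10.112.23.20  12321' \
  | count_unicast_agents
```

Compare the number against what you expect for that host before moving on.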

Validate!

Retest the Skyline Health, and those vSAN alarms should go away!