Define and differentiate Acropolis Block Services (ABS) and Acropolis File Services (AFS)

Acropolis Block Services (ABS)

- Exposes backend DSF to external consumers via iSCSI

- Use Cases:

- Constructs:

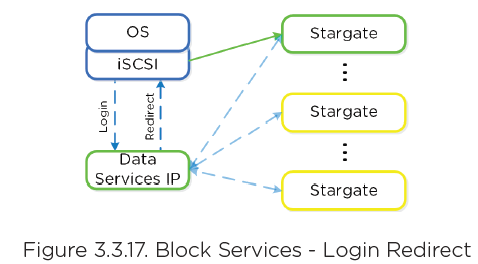

- Data Services IP: Cluster Wide VIP for iSCSI logins

- Volume Group: iSCSI target/group of disk devices

- Disks: Devices in Volume Group

- Attachment: Permissions for IQN access

- Backend: each disk in a VG is just a vDisk on DSF
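The constructs above can be pictured as a small data model. This is only an illustrative sketch: the class and field names (`Disk`, `VolumeGroup`, `attached_iqns`, `can_login`) are hypothetical and are not part of any Nutanix API.

```python
from dataclasses import dataclass, field


@dataclass
class Disk:
    """One disk device in a volume group; on the backend it is a vDisk on DSF."""
    index: int
    size_gib: int


@dataclass
class VolumeGroup:
    """An iSCSI target that groups disk devices."""
    name: str
    disks: list[Disk] = field(default_factory=list)
    # Attachments: initiator IQNs that are permitted to access this VG.
    attached_iqns: set[str] = field(default_factory=set)

    def attach(self, iqn: str) -> None:
        """Grant an initiator IQN access to this volume group."""
        self.attached_iqns.add(iqn)

    def can_login(self, iqn: str) -> bool:
        """Return True only if the IQN has an attachment."""
        return iqn in self.attached_iqns


@dataclass
class Cluster:
    """Holds the cluster-wide Data Services IP and the volume groups."""
    data_services_ip: str
    volume_groups: dict[str, VolumeGroup] = field(default_factory=dict)
```

In this model an initiator reaches the cluster through `data_services_ip`, and a login to a volume group succeeds only when its IQN has been attached.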

Path High-Availability

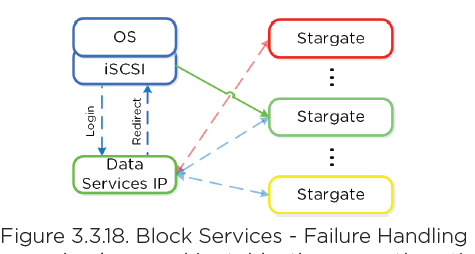

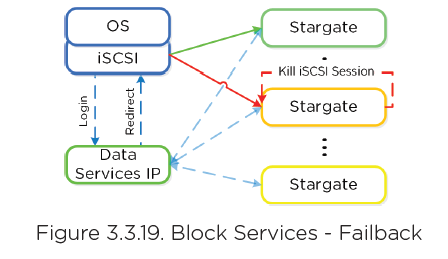

- Data Services VIP leveraged for discovery

- Clients use a single address and do not need to know individual CVM IPs

- The VIP is assigned to the iSCSI master

- In the event of a failure, a new master is elected and takes over the address

- With this, client-side MPIO is not needed

- No need to check “enable multi-path” in Hyper-V
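The reason client-side MPIO is unnecessary can be shown with a toy model: the client only ever talks to the VIP, and the VIP moves with the master. The class name and the election rule (first remaining healthy CVM) are assumptions made for illustration; the notes do not describe how the real election works.

```python
class DataServicesVip:
    """Toy model of a cluster-wide VIP that follows the iSCSI master."""

    def __init__(self, vip: str, cvm_ips: list[str]):
        self.vip = vip
        self.healthy = list(cvm_ips)
        # Assumption: the first CVM starts out as the iSCSI master.
        self.master = self.healthy[0]

    def fail(self, cvm_ip: str) -> None:
        """Mark a CVM as failed and elect a new master if needed."""
        self.healthy.remove(cvm_ip)
        if cvm_ip == self.master:
            if not self.healthy:
                raise RuntimeError("no healthy CVM left to host the VIP")
            # Placeholder election: pick the first remaining healthy CVM.
            self.master = self.healthy[0]

    def resolve(self) -> str:
        """Return the CVM currently answering on the VIP."""
        return self.master
```

The client configuration never changes: it keeps using `vip`, while `resolve()` returns a different CVM after a failure.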

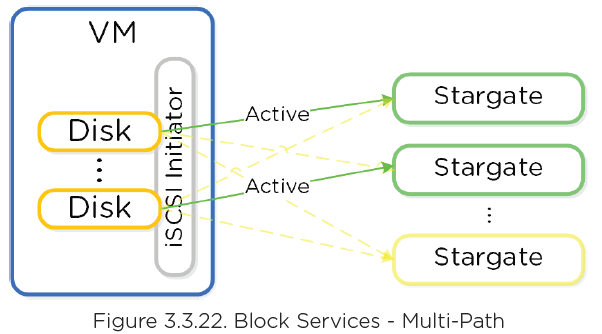

Multi-Pathing

- The iSCSI protocol mandates a single iSCSI session per target

- 1:1 relationship from Stargate to target

- Virtual targets are automatically created per attached initiator and assigned to the VG

- Provides iSCSI target per disk device

- Allows each disk device to have its own iSCSI session, hosted across multiple Stargates

- Load balancing occurs during iSCSI session establishment per target

- Performed via hash

- TRIM supported (UNMAP)
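The per-disk virtual targets and hash-based placement described above might look roughly like the sketch below. The target naming scheme, the use of SHA-256, and the function names are all assumptions for illustration; the notes only say that placement is performed via a hash.

```python
import hashlib


def virtual_targets(vg_name: str, initiator_iqn: str, disk_count: int) -> list[str]:
    """Build one hypothetical virtual target name per disk device."""
    return [f"{vg_name}:{initiator_iqn}:disk{i}" for i in range(disk_count)]


def pick_stargate(target_name: str, stargates: list[str]) -> str:
    """Map a target to a Stargate with a deterministic hash."""
    digest = hashlib.sha256(target_name.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer bucket selector.
    return stargates[int.from_bytes(digest[:8], "big") % len(stargates)]


def place_sessions(targets: list[str], stargates: list[str]) -> dict[str, str]:
    """Decide, at session establishment, which Stargate hosts each target."""
    return {target: pick_stargate(target, stargates) for target in targets}
```

Because each disk device has its own target and therefore its own session, a volume group with several disks can be served by several Stargates at once, while each individual target still keeps a 1:1 relationship with one Stargate.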

Acropolis

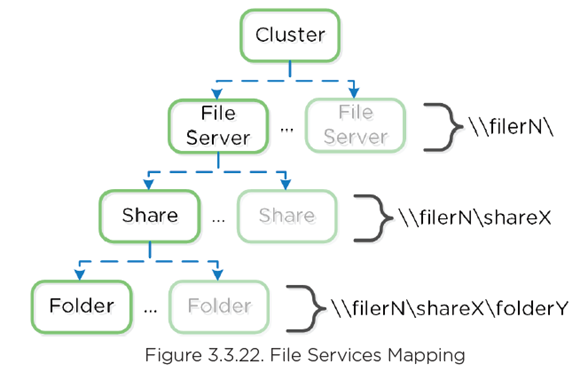

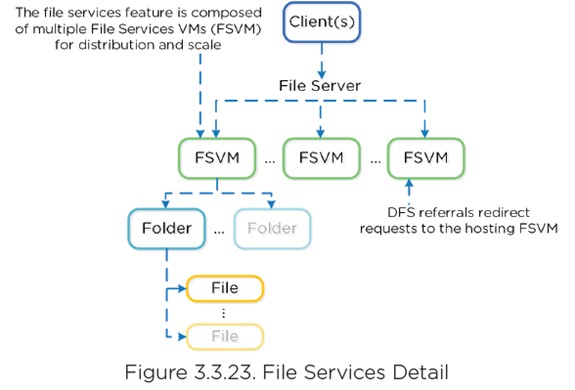

File Services (AFS)

- SMB is the only supported protocol

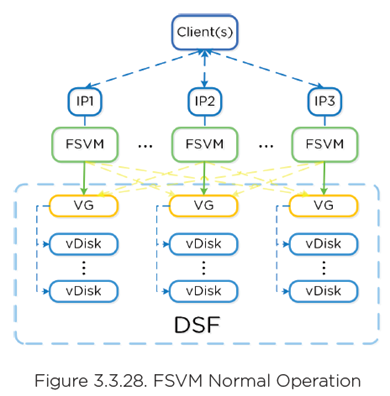

- File Services VMs (FSVMs) run as agent VMs

- Minimum of 3 FSVMs deployed by default for scale

- Transparently deployed

- Integrated into AD/DNS

- During install, the following are created:

- AD SPN for file server and each FSVM

- DNS entries for the file server pointing to all FSVMs

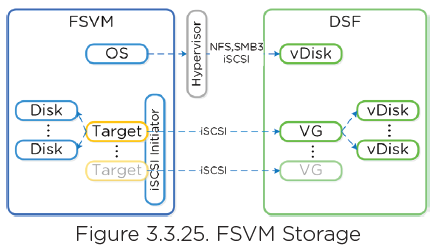

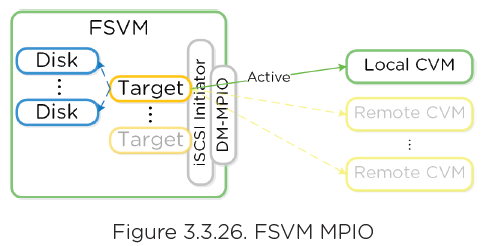

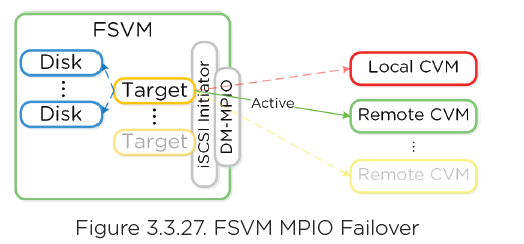

- Each FSVM uses the Acropolis Volumes API, accessed via in-guest iSCSI

- Allows an FSVM to connect to any iSCSI target in the event of an outage

- For availability, DM-MPIO (Linux multipathing) is leveraged within the FSVM, with the active path set to the local CVM.

- In the event of a failure, DM-MPIO will activate a failover path on a remote CVM.

- Each FSVM has an IP that clients use to communicate with the FSVM as part of DFS.
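The DM-MPIO behaviour described above (prefer the local CVM, fall back to a remote one) can be sketched as a simple path-selection rule. This is a conceptual model only, not DM-MPIO configuration; `Path` and `active_path` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One iSCSI path from an FSVM to a CVM."""
    cvm_ip: str
    is_local: bool
    healthy: bool = True


def active_path(paths: list[Path]) -> Path:
    """Prefer the healthy local path; otherwise use a healthy remote path."""
    healthy = [p for p in paths if p.healthy]
    if not healthy:
        raise RuntimeError("no healthy path available")
    for path in healthy:
        if path.is_local:
            return path
    # Assumption: the first healthy remote path becomes the failover path.
    return healthy[0]
```

Once the local path is marked healthy again, the same rule selects it as the active path again.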

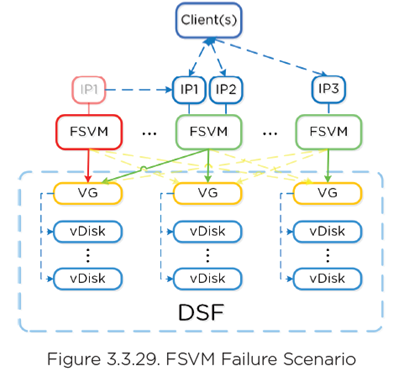

- In the event of a failure, the VG and IP of the failed FSVM will be taken over by another FSVM

- When the failed FSVM comes back and is stable, it will re-take its IP and VG.
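The takeover and failback sequence can be modelled as a small state machine. The class, its fields, and the rule for choosing the successor (first surviving FSVM by name) are assumptions for illustration and do not reflect how AFS actually picks the FSVM that takes over.

```python
class FileServer:
    """Toy model of FSVM failover; not the actual AFS implementation."""

    def __init__(self, fsvms: dict[str, tuple[str, str]]):
        # fsvms maps an FSVM name to its home (IP, volume group) pair.
        self.resources = dict(fsvms)
        # holder[name] is the FSVM currently serving name's IP and VG.
        self.holder = {name: name for name in fsvms}
        self.up = set(fsvms)

    def fail(self, name: str) -> None:
        """Move everything the failed FSVM was serving to a survivor."""
        self.up.discard(name)
        if not self.up:
            raise RuntimeError("no FSVM left to take over")
        # Assumption: the first surviving FSVM by name takes over.
        successor = sorted(self.up)[0]
        for home, current in self.holder.items():
            if current == name:
                self.holder[home] = successor

    def recover(self, name: str) -> None:
        """A recovered, stable FSVM re-takes its own IP and VG."""
        self.up.add(name)
        self.holder[name] = name
```

Clients keep using the same IP throughout; only the FSVM answering on that IP changes during the outage.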